PointNet Adaptation to Object Oriented 3D Bounding Boxes

- Raymond Danks

- Jul 5, 2020

- 5 min read

PointNet is a novel neural network architecture that accepts raw point clouds directly, rather than data preprocessed from them. It was originally designed for classification and segmentation; I adapted it to regress the parameters of object-oriented bounding boxes. As an additional innovation, I created a custom method for including normals with the point cloud data, to aid the identification of relationships between individual points. My implementation uses convolutional neural networks (CNNs), max pooling, point cloud manipulation, Matplotlib visualisation, custom dataset creation and manipulation, and subclassing for custom models and layers, and it extended my knowledge in all of these areas.

My implementation can be seen below (using Jupyter's nbviewer) and on my GitHub here:


Below, I give a theoretical and practical justification of PointNet, of the adjustments I have made to it, and of my other innovations.

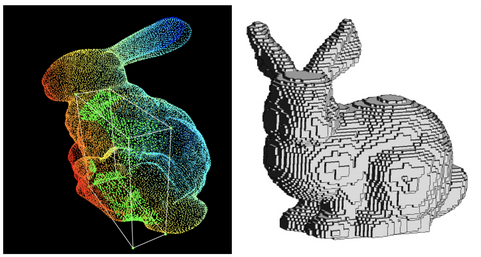

Firstly, neural networks excel at learning and representing high-level, complex relationships between features, so applying them to point clouds is intuitive. However, point clouds are extremely dense (because of the high sampling rate of the sensors that capture them), which means that much of the data may be noise or otherwise redundant. Point clouds are also a highly irregular format: the number of points in a cloud has no fixed upper or lower limit and can even depend on the scanning technique. This is problematic for neural networks, which require a constant input size and can be thrown off by unknown correlations between input features. Until recently, a common solution was to convert the point cloud into 3D voxels: "solid" volumes which, placed together, approximate the shape represented by the point cloud. Because a voxel grid has a fixed size, it can be fed to a neural network consistently. The issue the PointNet authors saw with this approach is that voxels have a finite size, so they make assumptions about the structure of the subject's surface and add unnecessary volume to it. This is similar to approximating a curve with straight line segments: with only a few segments, much of the curve's detail is lost, whereas with infinitely many segments the approximation would match the curve exactly. Voxelisation sits between these two extremes. The image below illustrates this well:
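Independently of the figure, the same trade-off can be sketched numerically. The minimal NumPy example below (not part of the original implementation; `voxelize` and the 16 x 16 x 16 grid size are illustrative assumptions) voxelises points sampled on a unit sphere. The grid has the same shape however many points go in, which is what makes it convenient as network input, but each point is effectively replaced by its voxel centre, so up to half a voxel diagonal of positional detail is lost.

```python
import numpy as np


def voxelize(points, grid_size=16):
    """Map an (N, 3) point cloud onto a fixed grid_size^3 occupancy grid."""
    mins = points.min(axis=0)
    # Edge length of one cubic voxel, chosen so the cloud's largest extent fits the grid.
    voxel = (points.max(axis=0) - mins).max() / grid_size
    idx = np.floor((points - mins) / voxel).astype(int)
    idx = np.clip(idx, 0, grid_size - 1)  # points on the far boundary go in the last voxel
    grid = np.zeros((grid_size,) * 3, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    # After voxelisation, only the voxel centre of each point survives.
    centres = mins + (idx + 0.5) * voxel
    return grid, centres, voxel


rng = np.random.default_rng(0)
results = {}
for n_points in (500, 5000):
    pts = rng.normal(size=(n_points, 3))
    pts /= np.linalg.norm(pts, axis=1, keepdims=True)  # project onto the unit sphere
    grid, centres, voxel = voxelize(pts)
    worst = np.linalg.norm(pts - centres, axis=1).max()
    results[n_points] = (grid.shape, worst, voxel)
    print(f"{n_points} points -> grid {grid.shape}, worst position error {worst:.3f}")
```

Raising `grid_size` shrinks the error but grows the input cubically, which is the "more line segments" end of the curve analogy.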

As can be seen above, the surface data of the subject is far more detailed (showing intricate curves) than that of the voxelised subject. Using the raw point cloud therefore retains more information to feed into a network. PointNet processes complex raw point clouds by being invariant both to the order of the individual points and to rigid transformations, and by treating the cloud as an unordered set. These three issues are often why researchers neglect raw point clouds. A point cloud in any order still represents the same mesh: scanning could start anywhere, so there is no reason for the first recorded point to come from a specific part of the mesh, and the cloud is therefore an unordered set. Moreover, if a rigid transformation is applied to the point cloud, it still represents exactly the same mesh, since the angle of a scanner is not necessarily fixed; similarly, perturbing an individual point (creating an outlier) should not change which mesh the cloud represents. It is by handling these invariances and processing the point cloud as an unordered set that PointNet is able to work on raw point clouds.
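A small illustration of these invariances, assuming NumPy: the hypothetical helper `shape_signature` below uses sorted pairwise distances, which do not change when a cloud is shuffled, rotated or translated, even though the raw coordinate arrays differ completely. It only illustrates why such clouds describe the same object; it is not something PointNet computes.

```python
import numpy as np


def random_rotation(rng):
    # QR of a Gaussian matrix gives a random orthogonal matrix; the sign fixes
    # make it a proper rotation (determinant +1).
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q *= np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] *= -1
    return q


def shape_signature(points):
    # Sorted pairwise distances: unchanged by reordering, rotating or translating the cloud.
    diffs = points[:, None, :] - points[None, :, :]
    return np.sort(np.linalg.norm(diffs, axis=-1).ravel())


rng = np.random.default_rng(1)
cloud = rng.uniform(-1, 1, size=(200, 3))
R = random_rotation(rng)
t = np.array([0.5, -2.0, 3.0])
other = (cloud @ R.T + t)[rng.permutation(len(cloud))]  # rotate, translate, then shuffle

arrays_equal = np.allclose(cloud, other)
same_shape = np.allclose(shape_signature(cloud), shape_signature(other))
print(arrays_equal, same_shape)  # False True
```

A network that consumed the coordinates in order would see `cloud` and `other` as unrelated inputs, which is exactly what PointNet's design has to avoid.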

PointNet uses a symmetric function (max pooling) to handle the unordered-set issue and derive context from raw point clouds (the authors also considered alternatives such as RNNs). It also uses transformation networks (T-Nets), which predict an alignment transform for the input points and features, to address invariance to rigid transformations.
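The sketch below illustrates both ideas with NumPy and random, untrained weights; all names and layer sizes are illustrative assumptions and much smaller than PointNet's. A shared MLP is applied to every point, and max pooling over the points yields a global feature that is unchanged by reordering the input. `tnet_regulariser` computes the orthogonality penalty ||I - AA^T||_F^2 that the PointNet paper adds for its 64 x 64 feature-transform matrix: a pure rotation scores (numerically) zero, while a shear is penalised.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical, untrained weights for a tiny shared MLP (3 -> 64 -> 128).
W1, b1 = rng.normal(size=(3, 64)), np.zeros(64)
W2, b2 = rng.normal(size=(64, 128)), np.zeros(128)


def relu(x):
    return np.maximum(x, 0.0)


def global_feature(points):
    # The same weights are applied to every point independently (a "shared MLP").
    per_point = relu(relu(points @ W1 + b1) @ W2 + b2)  # (N, 128)
    # Max pooling over the point axis is a symmetric function: order cannot matter.
    return per_point.max(axis=0)  # (128,)


def tnet_regulariser(A):
    # ||I - A A^T||_F^2: zero for orthogonal matrices such as rotations.
    return np.sum((np.eye(A.shape[0]) - A @ A.T) ** 2)


cloud = rng.uniform(-1, 1, size=(1024, 3))
shuffled = cloud[rng.permutation(len(cloud))]
same = np.allclose(global_feature(cloud), global_feature(shuffled))

theta = 0.3
rotation = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                     [np.sin(theta), np.cos(theta), 0.0],
                     [0.0, 0.0, 1.0]])
shear = np.array([[1.0, 0.5, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
print(same, tnet_regulariser(rotation), tnet_regulariser(shear))
```

In a real network the shared MLP is usually a 1x1 convolution over the point axis, and the T-Net is itself a small PointNet whose output matrix multiplies the points before the shared MLP.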

PointNet was originally designed for two tasks, classification and segmentation, and the network has two separate "branches" for these independent tasks, seen below:

I implemented each of these networks individually before building the final version. The idea behind this project was to use PointNet's insight into the features and context of point clouds to regress oriented bounding box parameters.
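In the original PointNet, the classification branch works from the 1024-dimensional global feature alone, whereas the segmentation branch concatenates each point's 64-dimensional local feature with that global feature, giving 1088 features per point. A NumPy sketch of that concatenation step, with random arrays standing in for learned features (the function name is hypothetical):

```python
import numpy as np


def segmentation_features(local_feats, global_feat):
    """Give every point both its own local feature and the cloud's global feature.

    local_feats: (N, 64) per-point features from early in the network.
    global_feat: (1024,) max-pooled descriptor of the whole cloud.
    Returns an (N, 1088) array.
    """
    n = local_feats.shape[0]
    tiled = np.broadcast_to(global_feat, (n, global_feat.shape[0]))
    return np.concatenate([local_feats, tiled], axis=1)


rng = np.random.default_rng(5)
n_points = 2048
local_feats = rng.normal(size=(n_points, 64))   # stand-in for learned point features
deep_feats = rng.normal(size=(n_points, 1024))  # stand-in for the 1024-d layer
global_feat = deep_feats.max(axis=0)            # the classification branch uses only this
per_point = segmentation_features(local_feats, global_feat)
print(global_feat.shape, per_point.shape)  # (1024,) (2048, 1088)
```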

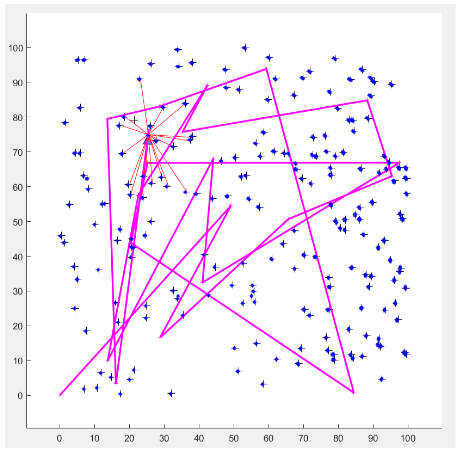

Firstly, I needed to create a dataset for oriented bounding boxes. To do this, I used generic meshes from the Stanford 3D Scanning Repository and fitted axis-aligned bounding boxes around them. I then iteratively rotated the meshes and their bounding boxes about all axes, so that the boxes were object-oriented rather than axis-aligned. I stored this dataset in a separate GitHub repository so that it could easily be used from Google Colab. An example of the dataset can be seen below:
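Separately from that example, the dataset-generation idea can be sketched as follows. This assumes a random stretched blob as a stand-in for a Stanford mesh and Euler-angle rotations; the function names are hypothetical and not taken from the author's repository. The key property is that the box is fitted once in the mesh's own frame and then rotated together with the mesh, so it stays tight around the object instead of becoming a looser axis-aligned box.

```python
import numpy as np


def rotation_xyz(ax, ay, az):
    # Rotation about x, then y, then z (angles in radians).
    cx, sx = np.cos(ax), np.sin(ax)
    cy, sy = np.cos(ay), np.sin(ay)
    cz, sz = np.cos(az), np.sin(az)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx


def box_corners(lo, hi):
    # All 8 combinations of the min/max value along each axis.
    return np.array([[x, y, z] for x in (lo[0], hi[0])
                               for y in (lo[1], hi[1])
                               for z in (lo[2], hi[2])])


rng = np.random.default_rng(3)
# Stand-in for a mesh's vertices: a stretched random blob (hypothetical data).
vertices = rng.normal(size=(2000, 3)) * np.array([3.0, 1.0, 0.5])
lo, hi = vertices.min(axis=0), vertices.max(axis=0)  # axis-aligned box in the mesh's frame
corners = box_corners(lo, hi)

samples = []
for _ in range(5):
    R = rotation_xyz(*rng.uniform(0, 2 * np.pi, size=3))
    # Rotate mesh and box together, so the box stays aligned with the object.
    samples.append((vertices @ R.T, corners @ R.T, R))

print(len(samples), samples[0][0].shape, samples[0][1].shape)  # 5 (2000, 3) (8, 3)
```

Undoing each rotation (`points @ R`) puts every vertex back inside the original box, which is a quick sanity check for generated samples.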

The extra pooling (symmetric) functions in the segmentation part of the network allow each point's relationship to the entire mesh to be analysed. I therefore judged that the per-point features (shown within the segmentation network in the defining diagram) would only increase the accuracy of bounding box fitting, and may even be a necessary prerequisite for regressing the box parameters, since what defines a bounding box is how each point relates to the overall cloud (for example, whether the points form one clump with a single outlier, which strongly affects whether a fitted box is accurate and usable). The segmentation network was therefore used as the backbone of this system. To obtain a regression output from it, another symmetric function (a pooling layer) was applied after the per-point features were derived. This pooling is followed by a sequence of fully connected layers, which regress the bounding box parameters from the per-point features and hence from the input point cloud as a whole.
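A minimal sketch of this regression head, with random weights and hypothetical sizes: it assumes 128 features per point from the segmentation backbone and a 9-value box parameterisation (centre, size and three Euler angles), which may differ from the parameters the author actually regressed. Because of the max pooling, clouds with any number of points map to the same fixed-size output.

```python
import numpy as np

rng = np.random.default_rng(4)

# Hypothetical layer sizes; the 9 outputs assume a box parameterised as
# centre (3) + size (3) + Euler angles (3).
LAYER_SIZES = [128, 256, 128, 9]
weights = [(rng.normal(scale=0.1, size=(m, n)), np.zeros(n))
           for m, n in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]


def regress_box(point_features):
    x = point_features.max(axis=0)  # extra symmetric function: (N, 128) -> (128,)
    for i, (W, b) in enumerate(weights):
        x = x @ W + b
        if i < len(weights) - 1:  # ReLU on hidden layers, linear output for regression
            x = np.maximum(x, 0.0)
    return x


for n_points in (500, 2048):
    feats = rng.normal(size=(n_points, 128))
    print(n_points, regress_box(feats).shape)
```

Keeping the final layer linear lets the outputs take any real value, which suits coordinates and angles; any constraints (for example positive sizes) would have to be handled by the loss or a post-processing step.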

Moreover, the PointNet paper suggests (although it does not implement) that including extra data from the point cloud, such as colour and normals, could allow further useful relationships to be learned between points and produce better results. I therefore created a system to add normals to the point cloud. The difficulty was that the point clouds for this system were sampled uniformly from existing meshes so that every cloud had the same number of points, which means there are not necessarily known normals for each sampled point. I therefore devised another way to relate points to one another using normal vectors. Each point in the cloud is sampled from a particular face of the mesh, so that face's normal can be attached to the point: it has an average relation to every point sampled from that face (if infinitely many points were sampled on the face, the face normal would be the resulting average vertex normal). This system added more information to each point individually and should therefore lead to more accurate oriented bounding box fitting.
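A sketch of this normal-attachment scheme in NumPy. The area-weighted face selection and barycentric sampling are assumptions about how the uniform sampling was done, and the function name is hypothetical; the output is an (N, 6) array of coordinates plus face normals. Mesh libraries such as trimesh expose the same information (`trimesh.sample.sample_surface` returns the index of the face each sample came from), so in practice the normal lookup can be a single indexing step.

```python
import numpy as np


def sample_with_face_normals(vertices, faces, n_points, rng):
    """Sample points uniformly over a triangle mesh and attach each point's face normal.

    Returns an (n_points, 6) array of [x, y, z, nx, ny, nz].
    """
    a, b, c = (vertices[faces[:, k]] for k in range(3))
    cross = np.cross(b - a, c - a)
    double_area = np.linalg.norm(cross, axis=1)
    normals = cross / double_area[:, None]  # unit normal of each face
    # Pick faces in proportion to their area so points are uniform over the surface.
    face_idx = rng.choice(len(faces), size=n_points, p=double_area / double_area.sum())
    # Uniform barycentric sampling; reflect samples that land outside the triangle.
    u, v = rng.uniform(size=(2, n_points))
    outside = u + v > 1
    u[outside], v[outside] = 1 - u[outside], 1 - v[outside]
    pa, pb, pc = a[face_idx], b[face_idx], c[face_idx]
    points = pa + u[:, None] * (pb - pa) + v[:, None] * (pc - pa)
    return np.hstack([points, normals[face_idx]])


# A tetrahedron whose triangles are wound so that their normals point outwards.
vertices = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
faces = np.array([[0, 2, 1], [0, 1, 3], [0, 3, 2], [1, 2, 3]])

rng = np.random.default_rng(6)
cloud = sample_with_face_normals(vertices, faces, 1000, rng)
print(cloud.shape)  # (1000, 6)
```

The six-channel array can then replace the plain (N, 3) input, with the first layer of the shared MLP widened from 3 to 6 input channels.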
